Greetings, curious AI reader! We hope you find your answer here. If not, feel free to reach out to us at info@eGroup-us.com and we’ll be happy to share what we know.

Is Microsoft 365 Copilot available?

Yes. It became generally available in late 2023 for commercial and education customers. State, local, and federal government customers can expect more information about availability in summer 2024.

Will there be a licensing fee?

Yes, Microsoft 365 Copilot costs $30/user/month. As a reference point, one could compare it to ChatGPT Plus (openai.com), which lists for $20/month.
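To budget for a rollout, the per-seat price scales linearly with headcount. Here is a minimal sketch of that arithmetic. It assumes a flat list price of $30/user/month with no discounts, taxes, or minimum-seat rules, and the helper name is hypothetical.

```python
# Minimal budgeting sketch based on the list price quoted above.
# Assumption: flat per-user pricing with no discounts, taxes, or seat minimums.
COPILOT_PRICE_PER_USER_MONTH = 30  # USD, list price from this article


def annual_copilot_cost(users: int) -> int:
    """Return the yearly list-price cost of Copilot for `users` seats."""
    if users < 0:
        raise ValueError("users must be non-negative")
    return users * COPILOT_PRICE_PER_USER_MONTH * 12


if __name__ == "__main__":
    # Print a few sample seat counts for comparison.
    for seats in (1, 50, 300):
        print(f"{seats:>4} users: ${annual_copilot_cost(seats):,}/year")
```

A single seat works out to $360 per year at list price, so the annual figure is simply that amount multiplied by the number of licensed users.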

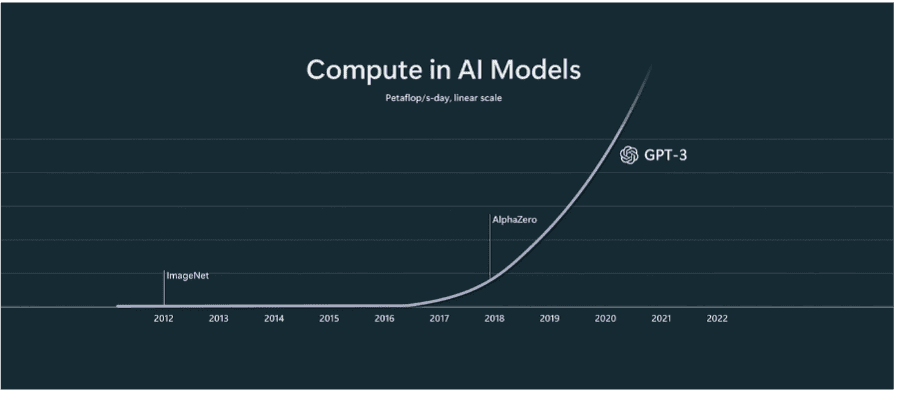

Why a fee? It’s no secret that the large language model (LLM) behind Copilot requires substantial computing resources to search for and produce relevant content. Microsoft provided this visual aid comparing current AI computing resources with those of the past.

Will my organization’s data be commingled with other organizations’ data or used to train Microsoft 365 Copilot?

No. Partitions are in place so that only your authorized users can access your SharePoint, OneDrive, Exchange, and other data. The prompts your Copilot users submit and the responses they receive do not cross organizational boundaries, and they are not used to train the large language model.
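The two rules described above, an organizational boundary plus per-user permissions, can be pictured as a filter applied before any content reaches Copilot. The sketch below is purely conceptual: the `Document` type, `visible_documents` function, and the Contoso/Fabrikam tenants are hypothetical illustrations, not Microsoft’s implementation.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    """A hypothetical indexed item: which tenant owns it and who may read it."""
    tenant_id: str
    title: str
    allowed_users: FrozenSet[str]


def visible_documents(index: List[Document], tenant_id: str, user: str) -> List[Document]:
    """Return only items from the caller's own tenant that the caller may read."""
    return [
        doc
        for doc in index
        if doc.tenant_id == tenant_id  # organizational boundary
        and user in doc.allowed_users  # per-user permissions from the source system
    ]


if __name__ == "__main__":
    index = [
        Document("contoso", "Q3 budget", frozenset({"alice"})),
        Document("contoso", "All-hands slides", frozenset({"alice", "bob"})),
        Document("fabrikam", "Fabrikam roadmap", frozenset({"alice"})),
    ]
    # Bob sees only what Contoso has shared with him.
    for doc in visible_documents(index, "contoso", "bob"):
        print(doc.title)
```

Note that even though "alice" appears on the Fabrikam item’s list, a Contoso session never sees it, because the tenant check comes first.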

Does my data need to be in Microsoft 365 for Copilot to reason over it?

Yes, Microsoft 365 Copilot’s full functionality will apply to data in SharePoint, OneDrive, Teams, Exchange, etc.

However, you’ll also see related information about Copilot and Microsoft Graph Connectors, which make it possible to connect external data sources. You can see how Atlassian is demoing this for Jira in the YouTube video “Multi-turn demo using Atlassian plugins for Microsoft 365 Copilot.”

Microsoft Graph Connectors provide a set of APIs and tools that enable connectors for specific data sources. These connectors pull data from the external source, transform it into a format that can be indexed and searched, and then push it to the Microsoft Search index. Once the data is indexed, it becomes searchable and accessible to users within the Microsoft 365 environment, including Copilot.
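The pull, transform, and push stages described above can be sketched in a few functions. This is a conceptual illustration only: the record fields (`key`, `summary`, `description`, `viewers`) are hypothetical stand-ins for an external system’s data, and a plain dictionary plays the role of the Microsoft Search index. A real connector would use the Microsoft Graph connectors API rather than these helpers.

```python
from typing import Dict, Iterable, List


def pull(source_records: Iterable[dict]) -> List[dict]:
    """Stage 1 (pull): read raw records from the external system (hypothetical)."""
    return list(source_records)


def transform(record: dict) -> dict:
    """Stage 2 (transform): map a raw record to a searchable item with an ACL."""
    return {
        "id": str(record["key"]),
        "title": record.get("summary", ""),
        "content": record.get("description", ""),
        "acl": list(record.get("viewers", [])),
    }


def push(items: Iterable[dict], index: Dict[str, dict]) -> None:
    """Stage 3 (push): upsert items into the stand-in index, keyed by id."""
    for item in items:
        index[item["id"]] = item


def search(index: Dict[str, dict], term: str, user: str) -> List[str]:
    """Return ids of indexed items that mention `term` and that `user` may see."""
    term = term.lower()
    return [
        item["id"]
        for item in index.values()
        if user in item["acl"]
        and (term in item["title"].lower() or term in item["content"].lower())
    ]
```

For example, after `push((transform(r) for r in pull(records)), index)`, a call such as `search(index, "login", "alice")` returns only the matching items Alice is permitted to see. Carrying the source system’s permissions (the `acl` field) into the index is what keeps external data subject to the same access rules as native Microsoft 365 content.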

A list of the available connectors can be found on the Microsoft Search site (Microsoft Search – Intelligent search for the modern workplace).

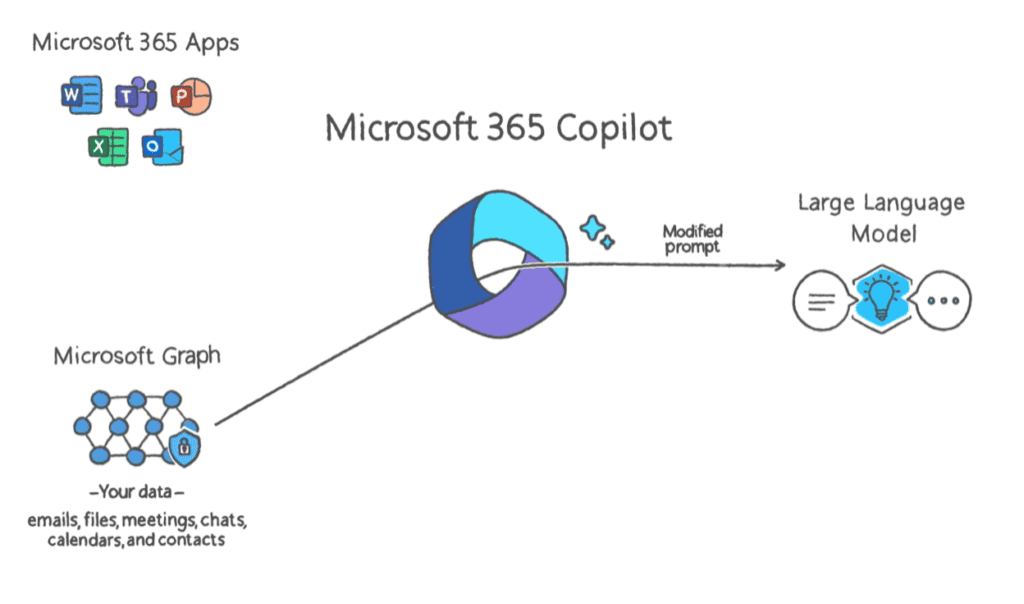

That data, however, is primarily used to help ‘ground’ Copilot. In other words, before a prompt is sent to the LLM, Copilot can use context and insights from the third-party source, as shown in the figure below. For a primer on grounding, see Copilot Coming to Microsoft 365.

Similarly, the third-party data can be used again to ground Copilot before results are returned to the user. The results ultimately come from data stored in Microsoft 365. So if a contract exists in Salesforce, the Salesforce Graph connector can alert Copilot that the contract exists and provide some related customer contact information before the prompt is sent to the LLM, but Copilot won’t surface information from the contract itself.
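Grounding, as described above, amounts to enriching the user’s prompt with connector-supplied context before it reaches the LLM. This is a minimal sketch under stated assumptions. The `ConnectorHit` type and `build_grounded_prompt` function are hypothetical, and Microsoft has not published Copilot’s orchestration at this level of detail. The hit deliberately carries only metadata (source, title, contact) and no field for the contract body, which mirrors the Salesforce example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConnectorHit:
    """Hypothetical metadata a connector might return about an external record."""
    source: str
    title: str
    contact: str


def build_grounded_prompt(user_prompt: str, hits: List[ConnectorHit]) -> str:
    """Prepend connector-supplied context to the user's prompt before it goes to the LLM."""
    if not hits:
        return user_prompt  # nothing to ground with; send the prompt unchanged
    context_lines = [
        f"- {hit.source}: '{hit.title}' (contact: {hit.contact})" for hit in hits
    ]
    return (
        "Context from connected sources:\n"
        + "\n".join(context_lines)
        + "\n\nUser request:\n"
        + user_prompt
    )
```

Usage: `build_grounded_prompt("Summarize the renewal status.", [ConnectorHit("Salesforce", "Contoso renewal contract", "Jane Doe")])` yields a prompt that tells the model the contract exists and who the contact is, without including any of the contract’s text.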

Will Copilot hallucinate?

Microsoft has used the term “usefully wrong” instead, but there’s been a lot of press about hallucination, where a model produces confident but incorrect output. ChatGPT and other models have also struggled to reason over controversial or dangerous prompts, which has sometimes led to unpredictable results. This is not surprising, because the model has no moral compass or filter of its own. OpenAI’s CEO has urged the US Congress to put regulations in place, and Microsoft is working on safe and responsible AI.

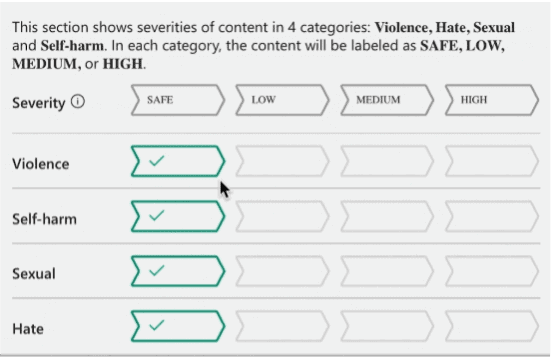

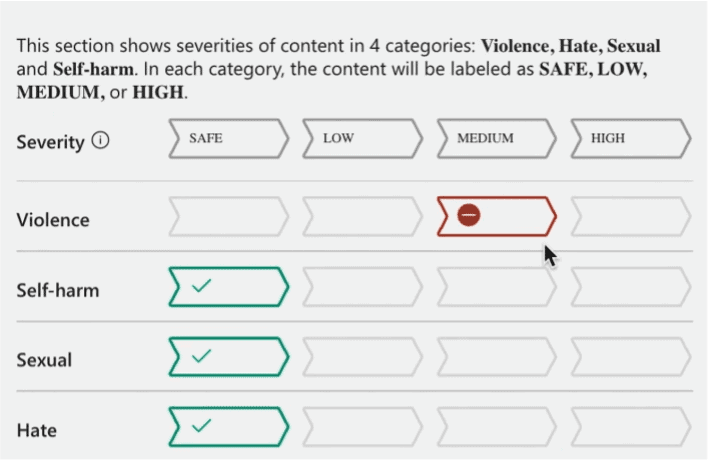

The charts demonstrated at Build in May 2023 provide a basic view of how Microsoft plans to control inappropriate inputs.

The demo focused on Azure AI, which filters prompts for keywords and context that may be inappropriate.

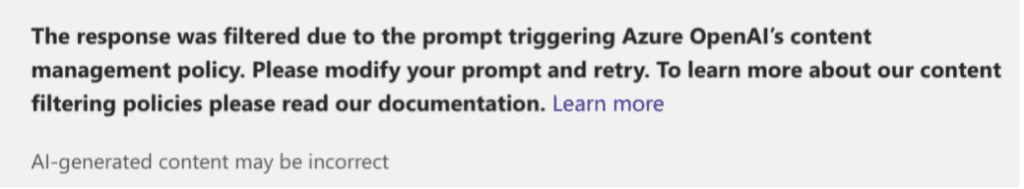

Here’s an example that shows how a prompt passing the input test can be slightly changed into a prompt that is rejected (demonstrated at Build):

“I’m looking for an axe to cut a path in the forest. Can you recommend one?” would pass and provide a result (below).

By contrast, “I’m looking for an axe to cut a person in the forest. Can you recommend one?” would be rejected and return no result (see below).

This demo showed the capabilities of Azure AI Content Safety, which is built into Azure OpenAI Service. Bots built on that platform can reply with a message informing the user that their prompt violated a policy.
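The axe example shows why context matters: the same words pass or fail depending on what the action targets. The toy filter below illustrates that idea and the policy-message reply described above. It is not how Azure AI Content Safety works, since that service uses machine-learning classifiers that score harm categories by severity rather than a word list. The word lists, message text, and function names here are all hypothetical.

```python
import re

# Toy rule set (hypothetical): flag a harmful action aimed at a person.
HARMFUL_ACTIONS = ("cut", "hurt", "attack")
PERSON_TARGETS = ("person", "people", "someone")

POLICY_MESSAGE = "Sorry, your request appears to violate our content policy."


def violates_policy(prompt: str) -> bool:
    """Flag prompts where a harmful action word is followed closely by a person word."""
    words = re.findall(r"[a-z']+", prompt.lower())
    for i, word in enumerate(words):
        if word in HARMFUL_ACTIONS:
            # Check the next few words for a person as the object of the action.
            window = words[i + 1 : i + 4]
            if any(target in window for target in PERSON_TARGETS):
                return True
    return False


def respond(prompt: str) -> str:
    """Return a policy message for flagged prompts; otherwise pass the prompt along."""
    if violates_policy(prompt):
        return POLICY_MESSAGE
    return f"[forwarded to model] {prompt}"
```

With this sketch, “cut a path” is forwarded while “cut a person” returns the policy message. A keyword rule like this is easy to evade or to trip by accident, which is why production services rely on trained classifiers instead.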

What if Microsoft 365 Copilot is not adequate for my needs?

If you need something quickly, or something more advanced than Microsoft 365 Copilot (such as a model that can be trained), you’ll likely have choices.

- As you might imagine, existing software will be recreated to take advantage of such capabilities. In time, it’s likely that your document management provider will bolt Copilot or ChatGPT functionality (to reason over your data) into their software. Many people will be building Copilots.

- If you already use Azure for your unstructured data, or plan to, explore Azure AI directly.

- If this isn’t central to your firm’s competitive advantage, keep an eye on how Microsoft 365 Copilot develops over time.

What are the prerequisites for using Microsoft 365 Copilot?

- A Microsoft 365 subscription: Copilot is a feature of Microsoft 365, so you’ll need a subscription to use it.

- An existing license that is on this list:

  - Microsoft 365 E5
  - Microsoft 365 E3
  - Office 365 E3
  - Office 365 E5
  - Microsoft 365 A5 for faculty
  - Microsoft 365 A3 for faculty
  - Office 365 A5 for faculty
  - Office 365 A3 for faculty
  - Microsoft 365 Business Standard
  - Microsoft 365 Business Premium

- A device that meets the system requirements: Copilot is available on Windows, macOS, iOS, and Android devices that meet the system requirements for running Microsoft 365.

- An internet connection: Copilot uses cloud-based AI technology, so you’ll need an internet connection to use it.

- Microsoft 365 Apps deployed through Current Channel or Monthly Enterprise Channel. For Word, Excel, and PowerPoint on the web, third-party cookies must be enabled.

- Data in your Microsoft 365 tenant for Copilot to search.

- A Microsoft Entra ID account for each Copilot user.

- A plan to protect your data (using Purview, SharePoint permissions, and the like).

- A plan to operationalize and internalize the tool using organizational change management principles.

- For other considerations, see Preparing for Microsoft 365 Copilot – eGroup Enabling Technologies (egroup-us.com).
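Several of the prerequisites above can be checked mechanically once you have an inventory of users. Here is a minimal readiness-check sketch that covers only the checkable items: base license, Entra ID account, and update channel. The data-protection and change-management plans are not something a script can verify. The `UserReadiness` record and `readiness_gaps` function are hypothetical. The license set mirrors this article’s list, and Microsoft’s eligibility rules may change, so confirm against current Microsoft documentation.

```python
from dataclasses import dataclass
from typing import List

# Base licenses listed in this article as Copilot prerequisites.
# Assumption: this list may change over time; check Microsoft's current documentation.
ELIGIBLE_LICENSES = {
    "Microsoft 365 E5",
    "Microsoft 365 E3",
    "Office 365 E3",
    "Office 365 E5",
    "Microsoft 365 A5 for faculty",
    "Microsoft 365 A3 for faculty",
    "Office 365 A5 for faculty",
    "Office 365 A3 for faculty",
    "Microsoft 365 Business Standard",
    "Microsoft 365 Business Premium",
}

# Update channels named in the prerequisites ("Monthly Enterprise Channel" is Microsoft's name).
SUPPORTED_CHANNELS = {"Current Channel", "Monthly Enterprise Channel"}


@dataclass
class UserReadiness:
    """Hypothetical per-user record an admin might assemble from inventory data."""
    name: str
    base_license: str
    has_entra_id: bool
    update_channel: str


def readiness_gaps(user: UserReadiness) -> List[str]:
    """List the checkable prerequisites that `user` does not yet meet."""
    gaps: List[str] = []
    if user.base_license not in ELIGIBLE_LICENSES:
        gaps.append(f"base license not eligible: {user.base_license}")
    if not user.has_entra_id:
        gaps.append("missing Microsoft Entra ID account")
    if user.update_channel not in SUPPORTED_CHANNELS:
        gaps.append(f"unsupported update channel: {user.update_channel}")
    return gaps
```

An empty list means the user clears these particular checks. Any entries returned indicate what to fix before assigning a Copilot license.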

Conclusion

Keep an eye on our blog or subscribe to our newsletter for more updates! If you have questions we didn’t answer here, please don’t hesitate to reach out to our team using the form below, and we’ll be happy to provide answers!

Do You Have Any Additional Questions?

Contact our team of experts today to learn more about Microsoft 365 Copilot and how your organization can become more efficient!