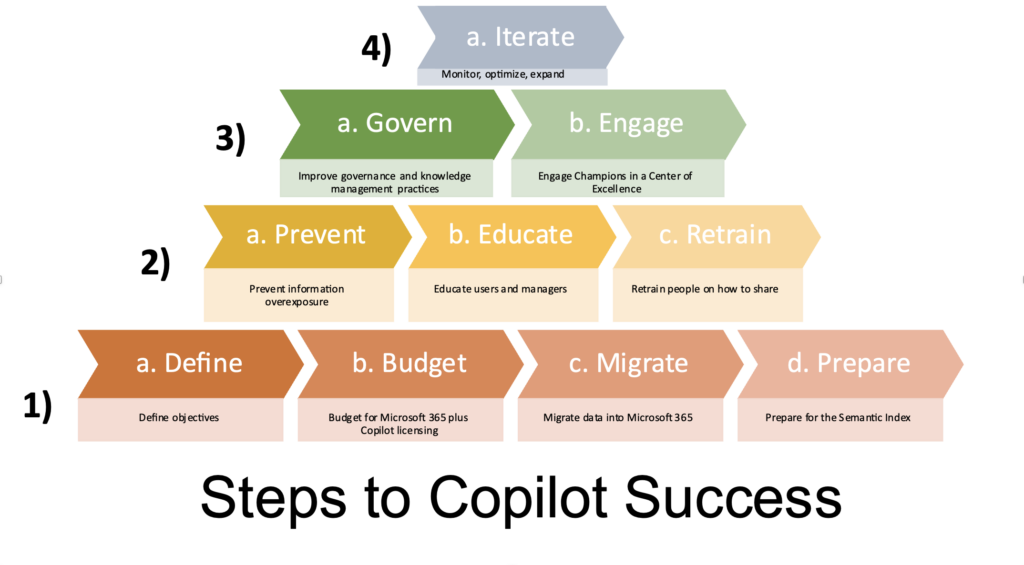

In our many engagements with Microsoft 365 Copilot, we’re starting to see a pattern of four main steps developing:

- Minimally Viable

- Critical

- Ideal

- Iterative

The steps are progressive: the critical steps build on the minimally viable ones, and the ideal steps are best carried out after, or while progressing through, the critical steps. Let’s break down each category below.

Minimally Viable Steps

Define Objectives

Generative AI is certainly helpful, and some people will inherently be able to put a finger on its subjective value – but to gauge its success, and to justify the expense of Microsoft 365 Copilot (and future AI investments), set some objectives.

Start by clearly defining your organization’s objectives and identify specific areas where generative AI can provide value. Whether it’s automating repetitive tasks, enhancing creative processes, or improving customer experiences, having well-defined objectives will guide your implementation strategy. This should include which departments will receive Copilot first, expected improvements, and how you’ll measure success (surveys, cycle time/productivity measures, etc.).

Budget for M365 E3 or E5 and Microsoft 365 Copilot Licensing

Copilot pricing is $30/user/month on top of a qualifying base Office or Microsoft 365 license. Government customers are rumored to be able to access Microsoft 365 Copilot in summer 2024.
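For a rough sense of the budget line, the add-on cost scales linearly with seats. The sketch below uses the $30 figure quoted above; the 300-user pilot size and the function name are hypothetical, and the qualifying base license is left out because its price depends on your agreement.

```python
COPILOT_PRICE_PER_USER_MONTH = 30  # USD, the add-on price quoted above


def copilot_annual_cost(seats, price_per_user_month=COPILOT_PRICE_PER_USER_MONTH):
    """Return the yearly Copilot add-on cost in USD, excluding the base license."""
    if seats < 0:
        raise ValueError("seats must be non-negative")
    return seats * price_per_user_month * 12


# Hypothetical pilot group of 300 users
print(copilot_annual_cost(300))  # 108000
```

Running the same calculation per department makes it easier to tie each tranche of licenses back to the objectives you defined earlier.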

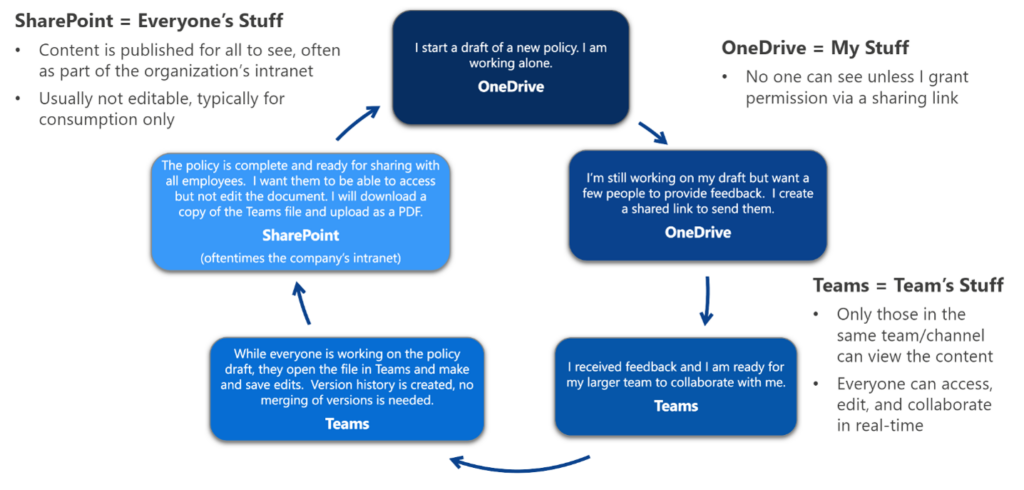

Migrate Data Into Microsoft 365

Since Microsoft 365 Copilot pulls data from OneDrive, SharePoint, Teams, etc., any data remaining on-premises or in other clouds won’t be accessible by the large language model. For tips on what types of files to move where, consider the chart below showing where orgs typically store “My” files, “Our” files, and “Everyone’s” files.

Prepare for the Semantic Index

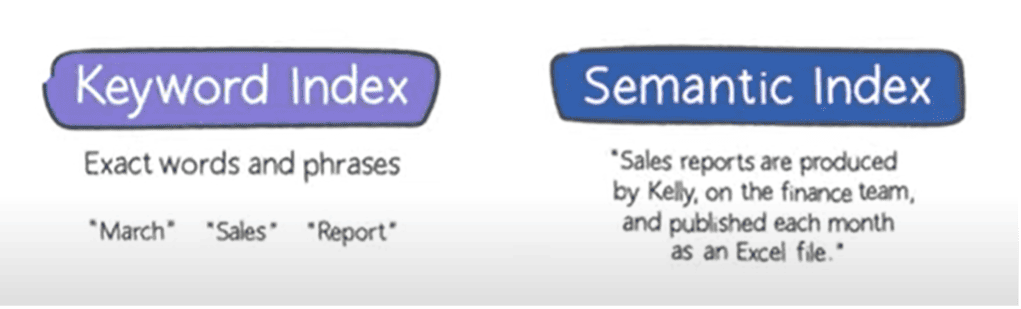

The Semantic Index uses AI to understand relationships between words within your tenant. It allows for quick searches through billions of vectors and returns the most relevant results in milliseconds. This feature helps users find actionable information and interact with the language model more effectively. Additionally, search results will provide context, such as your relationship to a document or person.

With a typical keyword search, shown on the left, you’d have to use specific words to find something relevant. The Semantic Index will allow a search using the same words to return much more context, as shown below.

Semantic Index for Copilot will understand the intent behind prompts and commands, producing more relevant, transparent results. Semantic Index search will also show you why you see a result, such as your relationship to a document, person, and more.

See below for an example of how it’ll help.

https://www.egroup-us.com/wp-content/uploads/2023/07/Screen-Recording-2023-07-05-at-4.14.39-PM.mov
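To make the keyword-versus-vector distinction concrete, here is a toy sketch. It is not how the Semantic Index is implemented: the three-dimensional vectors are invented by hand for illustration (real systems learn high-dimensional embeddings), and the document titles are hypothetical.

```python
import math

# Hand-made 3-dimensional "embeddings" (axes: HR policy, travel, finance).
# These numbers are invented purely to illustrate the idea.
DOCS = {
    "Paid time off guidelines": (0.9, 0.1, 0.0),
    "Vacation rental receipts": (0.1, 0.6, 0.7),
    "Quarterly budget forecast": (0.0, 0.0, 1.0),
}
QUERY_TEXT = "vacation policy"
QUERY_VECTOR = (0.8, 0.2, 0.0)  # assumed embedding of the query


def keyword_search(query, docs):
    """Return titles that share at least one word with the query."""
    words = set(query.lower().split())
    return [title for title in docs if words & set(title.lower().split())]


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def semantic_search(query_vector, docs):
    """Return titles ordered by cosine similarity to the query, best first."""
    return sorted(docs, key=lambda t: cosine(query_vector, docs[t]), reverse=True)


print(keyword_search(QUERY_TEXT, DOCS))        # ['Vacation rental receipts']
print(semantic_search(QUERY_VECTOR, DOCS)[0])  # Paid time off guidelines
```

The keyword match latches onto the literal word "vacation" and misses the policy document, while the vector comparison ranks the policy first because it is closest in meaning.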

Critical Steps

Microsoft 365 Copilot can work simply by licensing and activating the service as outlined above – but like any software, that’s only the beginning of the story. These next steps are critical for a successful outcome.

Prevent Information Overexposure

Any data that is accessible to a user within their M365 tenant is available to search and display via Copilot, even data that they shouldn’t be able to see. Let’s say an HR executive inadvertently shared a payroll spreadsheet with “Everyone” from OneDrive, or an M&A document was loaded on a SharePoint site with no privilege controls. A person asking Copilot for payroll or M&A information may be able to surface such content.
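The scenario above can be sketched in a few lines. This is a simplified model, not Copilot’s actual retrieval logic: the `Document` class, the user names, and the file names are all hypothetical, and “Everyone” stands in for any tenant-wide sharing group.

```python
from dataclasses import dataclass, field

EVERYONE = "Everyone"  # stand-in for a tenant-wide sharing group


@dataclass
class Document:
    title: str
    allowed: set = field(default_factory=set)  # users or groups with access


def visible_to(user, docs):
    """Return the titles a user can already open, i.e. what an assistant could surface."""
    return [d.title for d in docs if user in d.allowed or EVERYONE in d.allowed]


docs = [
    Document("Payroll 2024.xlsx", {"hr_exec", EVERYONE}),  # overshared by mistake
    Document("M&A target list.docx", {"cfo", "ceo"}),      # properly restricted
    Document("Team lunch menu.docx", {EVERYONE}),
]

print(visible_to("intern", docs))  # ['Payroll 2024.xlsx', 'Team lunch menu.docx']
```

The assistant adds no new access here. It simply makes existing permissions, including the mistaken ones, much easier to exercise.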

There are two steps to prevent Copilot from serving up sensitive info within files to the wrong person.

First, at the file level, use Microsoft Purview Information Protection to identify sensitive information, and then set Data Loss Prevention (DLP) policies on such content. Previous blogs describe how to discover sensitive information in your tenant and protect against its oversharing (including via searching through Copilot). To date, Purview has been used primarily by firms with regulatory or legal requirements to protect data. Now with Copilot for M365, the same mindset applies, but more so to protect against internal oversharing.

This is easier said than done. Business input is needed to help IT identify which content (e.g., keywords or sites) is sensitive and needs to be restricted. The problem isn’t insurmountable, and Purview Content Explorer helps. Yet it realistically takes 6-12 months to operationalize a DLP implementation.
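To show the kind of rule the business and IT need to agree on, here is a minimal pattern-matching sketch. It is not Purview’s detection engine or its built-in sensitive information types; the two patterns and their names are assumptions chosen for illustration.

```python
import re

# Illustrative patterns only; a real deployment would rely on the
# classifiers and policies configured in Purview.
PATTERNS = {
    "us_ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "payroll_keyword": re.compile(r"\b(salary|payroll)\b", re.IGNORECASE),
}


def find_sensitive(text):
    """Return the sorted names of the patterns that match the text."""
    return sorted(name for name, rx in PATTERNS.items() if rx.search(text))


print(find_sensitive("Payroll export: Jane Doe, 123-45-6789"))  # ['payroll_keyword', 'us_ssn_like']
print(find_sensitive("Agenda for Tuesday's team lunch"))        # []
```

Even this toy version shows why business input matters: someone has to decide which keywords and identifiers count as sensitive before any policy can act on them.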

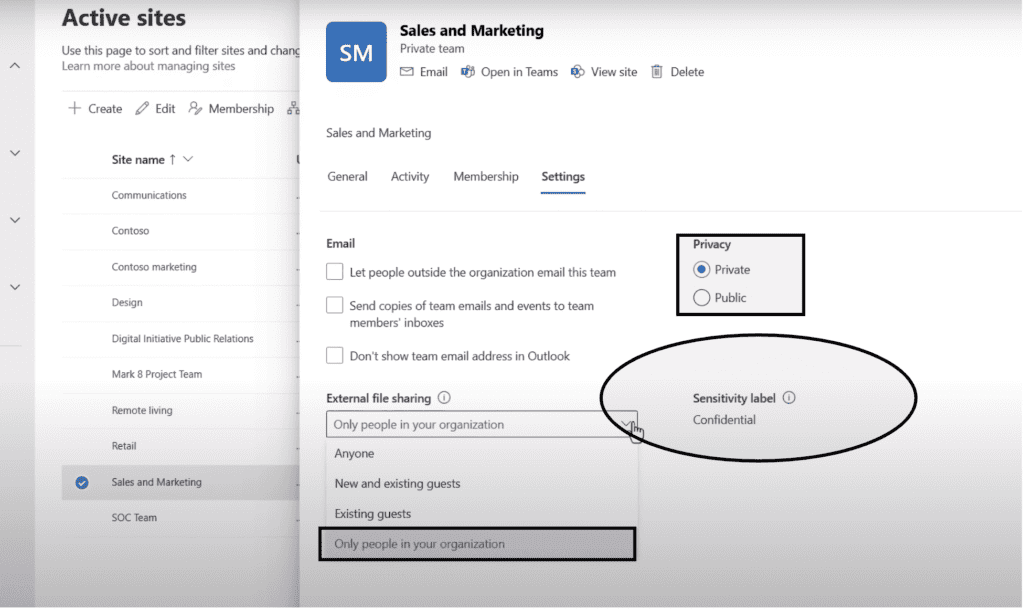

Second, at the site/Team level within SharePoint and Teams, you should audit and tighten the access to content. Some of the controls are outlined below. You can then reduce the ability to discover information on those sites.

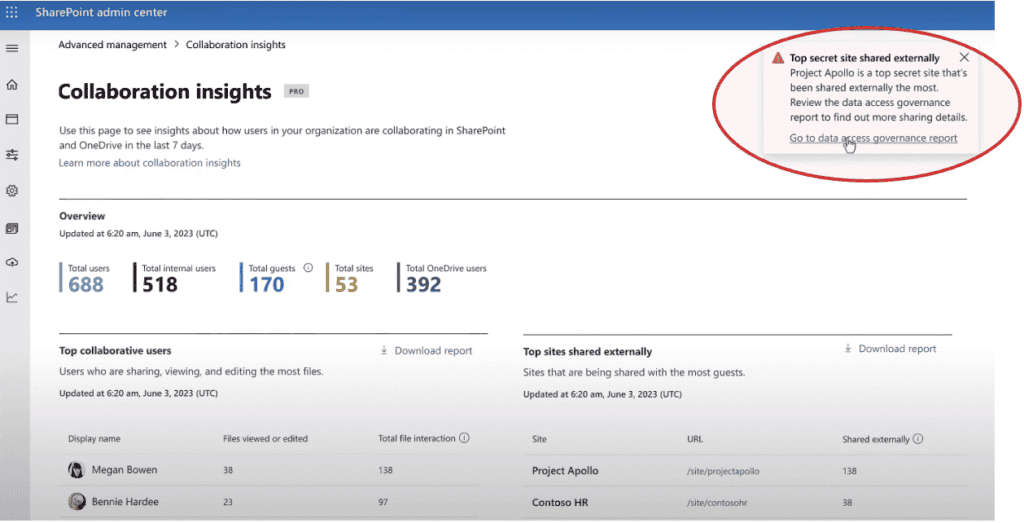

To assist in this effort, Microsoft Syntex (optional/additional license required) provides advanced capabilities to automatically find oversharing and then allow admins to put controls in place like requiring access reviews by site owners. See an example below of the type of insight Syntex can provide.

Educate Your People

People are hearing a lot about AI’s potential to alter or eliminate tasks. To what extent depends on the role and industry, but change is inevitable. For some, perhaps many, people, this change will be threatening, so wise organizations will take a proactive approach and handle their people with care.

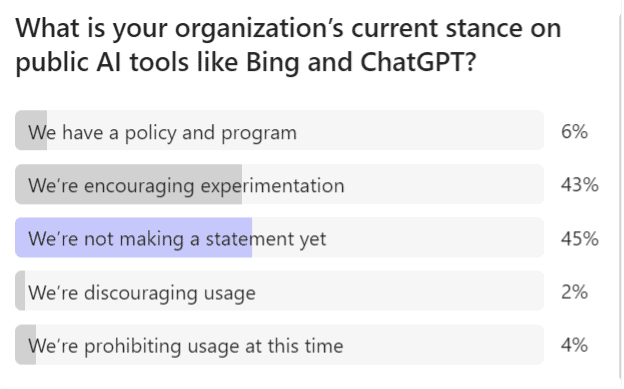

In a recent poll, respondents were quite open to using public AI tools (note the data is biased since it was taken during a Copilot webinar). This curiosity is healthy, but it likely is neither structured nor intentional. Microsoft 365 Copilot’s rollout, since it’d be a sanctioned tool for work, should be structured and intentional.

A short list of topics to explain to everyone:

- AI can be wrong – people need to check facts

- Don’t overshare sensitive files (see below)

- Learn to write effective prompts

And managers, specifically, should:

- Use the tools and demonstrate the outcomes yourself

- Coach those who need ideas on when to use the tools. Others will gravitate naturally.

- Show people (by upskilling them and fostering their career growth), not just tell them, that the machines are not here to take their jobs

Retrain People On How To Share

This is important enough to break out from the general “education” suggestions above. People should think more intentionally about how they share files.

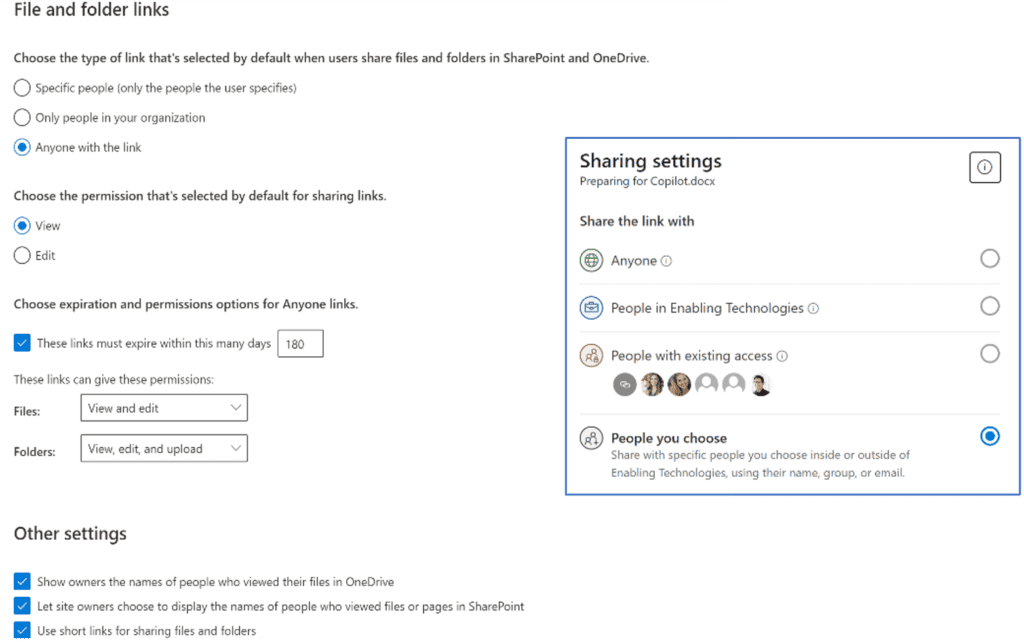

Giving people permission to a file, site, group, or team gives them access to all the content there. When someone wants to share an individual file or folder instead, they use shareable links. There are three primary link types:

- Anyone links give access to the item to anyone who has the link, including people outside the organization. They don’t have to authenticate and can’t be audited.

- People in your organization links work for only people inside your Microsoft 365 organization (not for guests or external participants).

- Specific people links only work for the people that users specify when they share the item.

Of the three choices, the third is the best, because it narrows the visibility of the content. The first, however, is the default, and because very few people select otherwise, they end up oversharing. The good news is that admins can change the default to “Specific People” to reduce oversharing via Copilot. Learn more about doing so at Manage sharing settings for SharePoint and OneDrive in Microsoft 365.
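The three link types can be modeled as a simple access check. This is a sketch of the rules as described above, not SharePoint’s implementation; the `can_open` function and its parameters are hypothetical names.

```python
from enum import Enum


class LinkType(Enum):
    ANYONE = "Anyone"
    ORGANIZATION = "People in your organization"
    SPECIFIC_PEOPLE = "Specific people"


def can_open(link_type, user, in_org, named_users=frozenset()):
    """Return True if the user can open an item shared with this link type."""
    if link_type is LinkType.ANYONE:
        return True  # no sign-in required, inside or outside the organization
    if link_type is LinkType.ORGANIZATION:
        return in_org  # guests and external participants are excluded
    return user in named_users  # only the people named when sharing


print(can_open(LinkType.ANYONE, "stranger", in_org=False))                         # True
print(can_open(LinkType.ORGANIZATION, "guest", in_org=False))                      # False
print(can_open(LinkType.SPECIFIC_PEOPLE, "alice", in_org=True, named_users={"bob"}))  # False
```

Only the last branch depends on who was named at sharing time, which is why “Specific people” gives the narrowest exposure.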

There are several settings in the SharePoint Admin Center (shown below) to consider changing from the defaults, which in turn changes the defaults users see when they share.

Ideal Steps

To ensure that you don’t have false starts, rework, or poor first impressions, strongly consider these tactics before or as you’re rolling out Microsoft 365 Copilot.

Govern data and manage knowledge

Generative AI relies heavily on data, so ensuring its quality, accuracy, and cleanliness is crucial. Establish data collection and storage processes, use underlying tools to better manage content, and encourage data stewards in each department who are creating and revising important data.

These practices are important for many reasons. A simple example is when Copilot finds two similar documents, one with current and correct information, one with outdated or incorrect information. We don’t expect the system to inherently know which one to pull from. Having a single source of truth for the data is not trivial.

To better manage the source of truth in Microsoft 365, Viva Topics should be explored. Among other things, Topics continuously learns and improves over time through user feedback and iterative processes. As users interact with the documents in the tenant, they can provide feedback on the relevance and accuracy of the suggested topics and content. This feedback loop helps refine the AI models and algorithms, ensuring that the most relevant and valuable information surfaces to users.

Think of how ChatGPT works. Humans (and machines) train the system before we as end-users get access to its (usually) accurate information. Copilot is the equivalent of ChatGPT’s UX within Microsoft 365. Viva Topics is the way to improve and cleanse the data that Copilot finds and produces.

Engage Champions in a Center of Excellence (CoE)

Creating an AI CoE can ensure effective AI adoption and implementation across the organization. Unlike more mundane requests CIOs have made of their business community, participation in this committee will likely be vibrant.

The AI CoE provides centralized expertise, strategic alignment, governance, skill development, collaboration, and technology evaluation. This promotes a consistent and structured approach to AI initiatives, ensures clean, secure data, mitigates risks, and ensures ethical and responsible AI practices.

More to come on this concept from our Strategic Advisory team.

Summary

Preparing for Microsoft 365 Copilot requires proactive steps to ensure a successful rollout. The process involves four categories of steps: Minimally Viable, Critical, Ideal, and Iterative.

In the Minimally Viable category, defining objectives is crucial to gauge Copilot’s success and justify the investment. Budgeting for Microsoft 365 Copilot licensing is a prerequisite. Migrating data into Microsoft 365 and preparing for the Semantic Index, a new search capability, are also necessary.

Critical steps include preventing information overexposure by using Microsoft Purview Information Protection and tightening access controls within SharePoint and Teams. Educating employees about AI’s potential and training them to share files intentionally are vital.

Ideal steps involve governing data and managing knowledge (possibly using tools like Viva Topics) and establishing an AI Center of Excellence to ensure effective adoption and implementation of AI initiatives.

Iterating these steps for additional users, or use cases, will improve results over time.

By following these steps, organizations can lay a strong foundation for a successful Microsoft 365 Copilot deployment, ensuring data security, employee readiness, and effective utilization of generative AI capabilities.

Learn more about Copilot for Microsoft 365

Interested in learning more about preparing your environment for Copilot for M365?

Contact our team of experts today to learn more!