Purview’s New AI Hub:

Reduce AI Risks and Improve AI Visibility

Tom Papahronis

CIO Advisor

I have written extensively about getting data governance programs off the ground and about the fundamentals of Purview Compliance. Today, though, I would like to address AI concerns in organizations that already have mature Purview implementations, including advanced E5 features like Endpoint DLP, Insider Risk Management, and Communication Compliance. Their AI compliance challenges are shifting from LLM data overexposure toward ensuring that people use the AI tools they now have broad access to responsibly, which includes preventing sensitive data from leaving the M365 tenant trust boundary. To help with this, Microsoft has released a new set of controls in Purview called the AI Hub (in preview as I write this). It is a one-stop location for managing the Purview policies and reports relevant to the organization’s AI usage.

This discussion assumes that, in addition to having an E5 license or the E5 Compliance add-on, you have already:

- Tuned your various data classifiers.

- Onboarded your endpoints into Purview.

- Configured Insider Risk Management and Communication Compliance.

- Deployed the Purview browser extension.

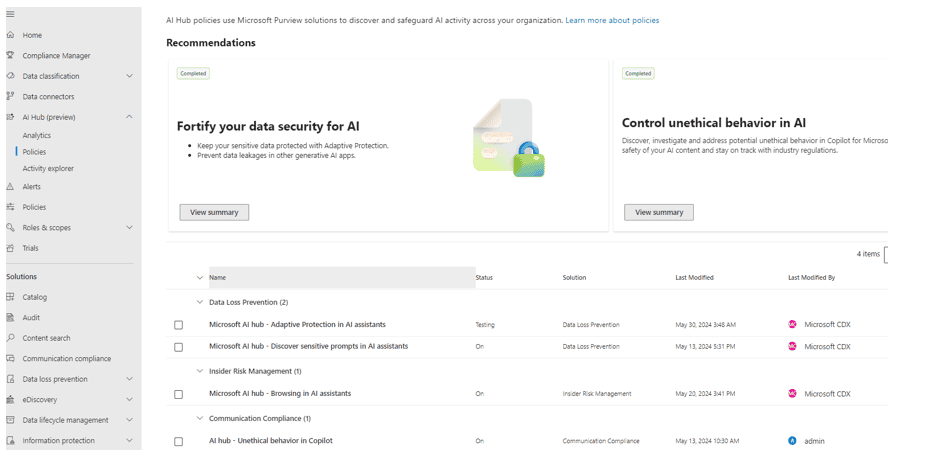

The AI Hub provides some basic AI-related policies for DLP, Insider Risk Management, and Communication Compliance. Microsoft has added parameters to each of these three feature sets so that their policies can specifically detect and/or restrict AI usage. The default AI policies that the AI Hub creates are shown below; all are enabled in audit-only mode.

New DLP Policies

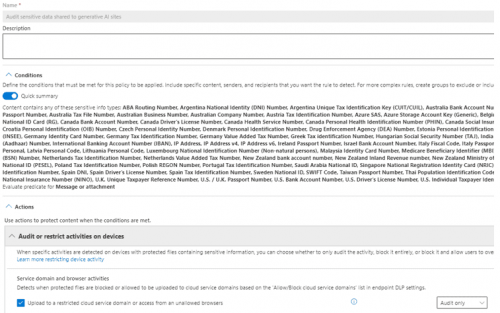

Discover Sensitive Prompts for AI Assistants

Using Endpoint DLP and the Microsoft Purview browser extension, this policy detects when any of 90+ sensitive information types are uploaded or pasted into a generative AI site. (Microsoft maintains a pre-populated list of these sites, called “Generative AI Websites”.) Like other Endpoint DLP policies, the actions available on detection are Audit, Block with Override, and Block.
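To make the detection logic concrete, here is a minimal Python sketch of how a rule like this might evaluate a single paste or upload. It is not Purview's implementation or API. The `GENAI_DOMAINS` set, the `ClipboardOrUploadEvent` shape, and the `evaluate` function are hypothetical. The sketch also assumes that sensitive information type detection has already happened upstream, because in Purview that classification is done by the DLP engine itself.

```python
from dataclasses import dataclass, field
from enum import Enum
from urllib.parse import urlparse


class Action(Enum):
    """Endpoint DLP actions named in the article, plus a no-match result."""
    ALLOW = "Allow"  # hypothetical: returned when the rule does not match
    AUDIT = "Audit"
    BLOCK_WITH_OVERRIDE = "Block with Override"
    BLOCK = "Block"


# Hypothetical stand-in for Microsoft's "Generative AI Websites" list (not the real list).
GENAI_DOMAINS = {"genai.example.com", "assistant.example.org"}


@dataclass
class ClipboardOrUploadEvent:
    """A paste or upload seen by the endpoint or browser extension (hypothetical shape)."""
    user: str
    url: str
    sensitive_types: list = field(default_factory=list)  # e.g. ["Credit Card Number"]


def evaluate(event: ClipboardOrUploadEvent, action: Action = Action.AUDIT) -> Action:
    """Return the configured action if sensitive data is sent to a generative AI site."""
    host = urlparse(event.url).hostname or ""
    if host in GENAI_DOMAINS and event.sensitive_types:
        return action
    return Action.ALLOW


if __name__ == "__main__":
    paste = ClipboardOrUploadEvent("alice", "https://genai.example.com/chat", ["Credit Card Number"])
    print(evaluate(paste).value)                # Audit (the AI Hub default mode)
    print(evaluate(paste, Action.BLOCK).value)  # Block
```

The action is a policy setting rather than something computed for each event. That is why the sketch takes it as a parameter and defaults to Audit, which matches the audit-only mode the AI Hub uses.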

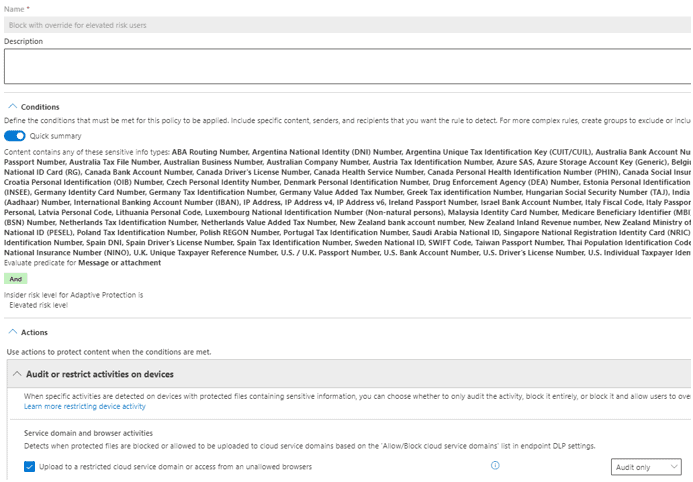

Adaptive Protection in AI Assistants

A parameter has been added to the DLP engine that lets it use the adaptive risk levels from Insider Risk Management as a policy condition. This new default policy detects when a user with an elevated adaptive risk level uses a generative AI website, and it audits the activity. Again, like other Endpoint DLP policies, the actions available on detection are Audit, Block with Override, and Block.

The same notifications, alerts, and threshold capabilities available in other DLP policies also apply to these new AI detection policies. These AI Hub DLP policies can log or prevent the sharing of sensitive data with third-party AI tools outside the Microsoft 365 tenant/Copilot trust boundary.
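The adaptive-risk condition from the previous policy can be sketched the same way. The rule fires only when both the user's insider risk level and the destination match. The `RiskLevel` values mirror Adaptive Protection's minor, moderate, and elevated levels. The `NONE` member, the domain set, and the `adaptive_ai_rule` function are hypothetical illustrations, not a Purview interface.

```python
from enum import IntEnum
from typing import Optional
from urllib.parse import urlparse


class RiskLevel(IntEnum):
    """Adaptive Protection insider risk levels; NONE is a hypothetical 'no level assigned'."""
    NONE = 0
    MINOR = 1
    MODERATE = 2
    ELEVATED = 3


# Hypothetical stand-in for the "Generative AI Websites" list (not the real list).
GENAI_DOMAINS = {"genai.example.com", "assistant.example.org"}


def adaptive_ai_rule(risk: RiskLevel, url: str, action: str = "Audit") -> Optional[str]:
    """Return the configured action when an elevated-risk user visits a generative AI site.

    Returns None when this rule does not apply to the activity.
    """
    host = urlparse(url).hostname or ""
    if risk == RiskLevel.ELEVATED and host in GENAI_DOMAINS:
        return action
    return None


if __name__ == "__main__":
    print(adaptive_ai_rule(RiskLevel.ELEVATED, "https://genai.example.com/"))  # Audit
    print(adaptive_ai_rule(RiskLevel.MINOR, "https://genai.example.com/"))     # None
```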

New Insider Risk Management Policy

Browsing in AI Assistants

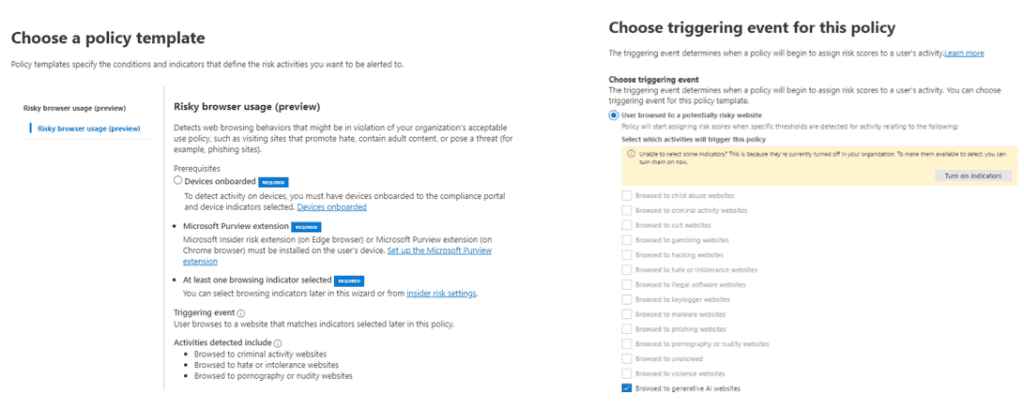

This is a new IRM policy based on the Risky Browser Usage policy template. It uses the “Browsed to generative AI websites” trigger event (again, based on the same Microsoft-maintained list) to detect a user browsing a generative AI website. A threshold can be set to fire an Insider Risk alert; the default is 10 instances daily.

Like other Insider Risk Management policies, it looks at audit log data to detect risky behaviors and score users accordingly. Those scores drive both alerts and adaptive risk levels. This policy can be used to audit or alert on excessive use of external AI websites when they are discouraged but not blocked.
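The alert threshold can be pictured as a per-user, per-day count. The sketch below simplifies heavily and rests on stated assumptions. It treats each visit as one event and assumes an alert fires once a user reaches the threshold (10 by default) on a single day. It also ignores the risk scoring, sequencing, and other indicators that real Insider Risk Management policies combine. The `users_to_alert` function and its input shape are hypothetical.

```python
from collections import Counter
from datetime import date

# Default daily threshold mentioned in the article; configurable in the policy.
DEFAULT_DAILY_THRESHOLD = 10


def users_to_alert(visits, threshold=DEFAULT_DAILY_THRESHOLD):
    """Return users who reached `threshold` generative AI site visits on any single day.

    `visits` is an iterable of (user, day) tuples, one per visit (hypothetical shape).
    """
    per_user_day = Counter(visits)  # number of visits for each (user, day) pair
    return sorted({user for (user, _day), count in per_user_day.items() if count >= threshold})


if __name__ == "__main__":
    day1, day2 = date(2024, 5, 1), date(2024, 5, 2)
    visits = [("alice", day1)] * 10 + [("bob", day1)] * 5 + [("bob", day2)] * 5
    print(users_to_alert(visits))  # ['alice']; bob never reaches 10 on a single day
```

Counting per day rather than in total is what keeps the user spreading visits across several days (bob) below the threshold.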

New Communication Compliance Policy

Unethical Behavior in Copilot

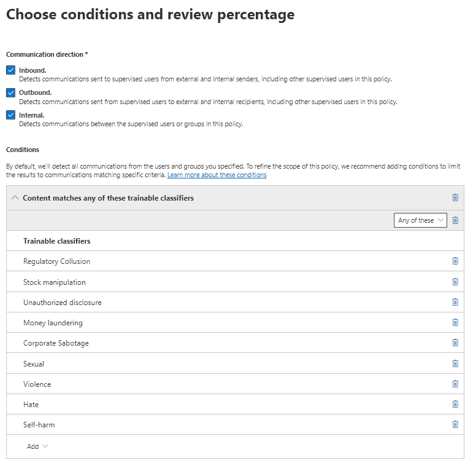

This policy reviews Copilot interactions for unethical prompts that match any of the following nine trainable classifiers and creates a Communication Compliance alert when it finds one:

- Regulatory Collusion

- Stock Manipulation

- Unauthorized Disclosure

- Money Laundering

- Corporate Sabotage

- Sexual

- Violence

- Hate

- Self-Harm

Like other Communication Compliance policies, it looks at audit log data to determine whether an alert should be fired. This policy is useful if you allow broad Copilot usage but need to monitor for unacceptable or unethical prompting.

New AI Risk Analytics

The AI Hub creates these policies as examples that help you gather baseline metrics and give you a starting point for tracking and controlling both Copilot and third-party generative AI usage. Real-world use cases will usually be more complex and nuanced, reflecting the specific needs of the organization. Much of the success of these AI policies will rely on other existing configurations in Purview, such as custom data classifiers or sensitivity label restrictions.

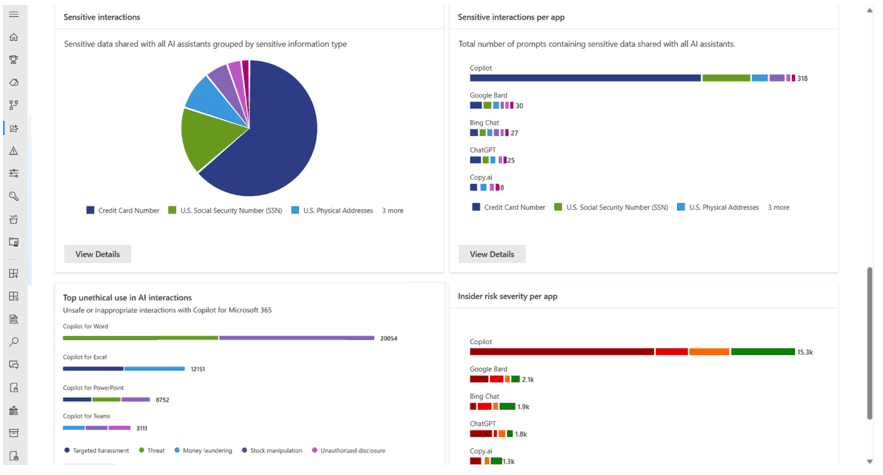

Over time, the AI Hub builds an analytics dashboard, like the one shown here, that gives you a detailed overview of AI usage and risks.

Purview and the AI Hub give you the tools and metrics needed to enforce internal AI governance charters and policies while also reinforcing good stewardship of sensitive data overall. Keep an eye on these new features and capabilities. The AI Hub is in preview now and should continue to improve as it moves toward General Availability.

We Can Help!

If you have any questions, or are looking for assistance with AI compliance challenges in your organization or help with the new AI Hub in Microsoft Purview, please reach out to info@eGroup-us.com or complete the form below.
